Amazon Simple Storage Service (S3) is an object storage service built to store and retrieve any amount of data from anywhere on the web.

Before starting the hands-on with S3, please read our previous blog (if you have not already), where we discussed S3 basics in detail.

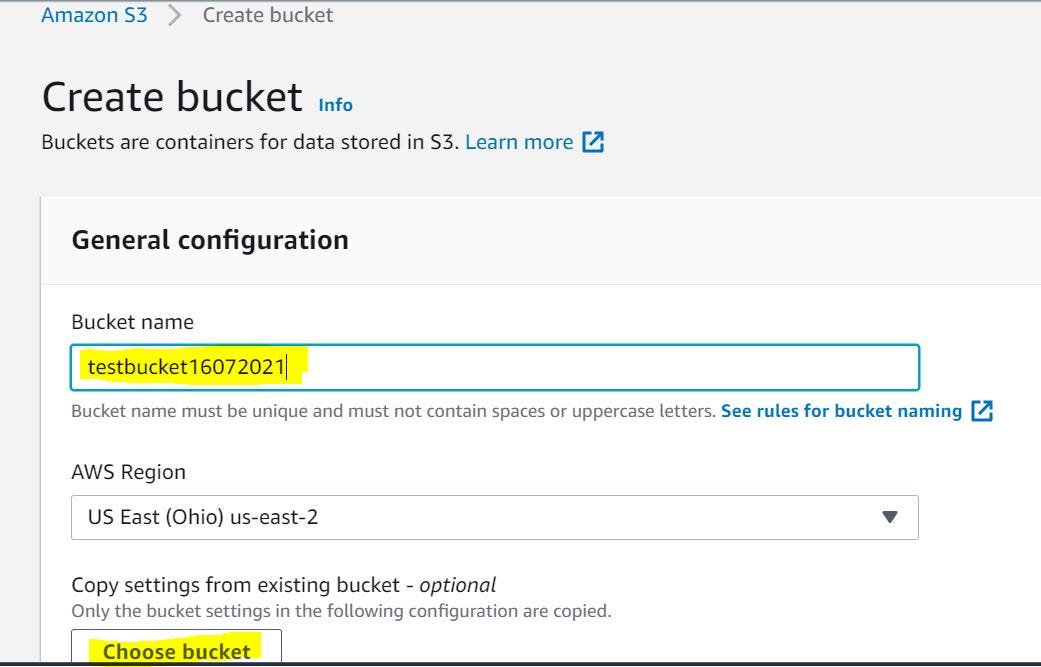

Let us look straight away at how to create an S3 bucket and upload data into it using the AWS Management Console.

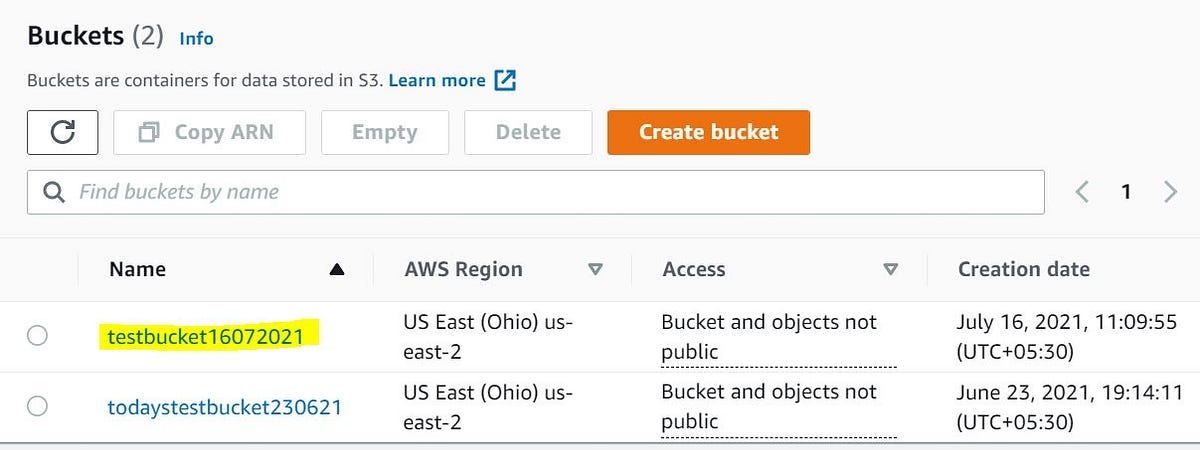

1. Log in to the console, go to the S3 service, and click Create bucket. Give your bucket a unique name (bucket names must be globally unique across all AWS accounts, not just your own).

2. Block public access to your bucket by selecting the Block Public Access checkbox so that no outsider can access the data in the bucket. Add tags as needed, then click Create bucket; your bucket will then appear in the bucket list.

3. Upload your files by clicking the Upload button and selecting the required files or folders. If you want your S3 data to be viewable over the internet, make sure you have allowed public access.

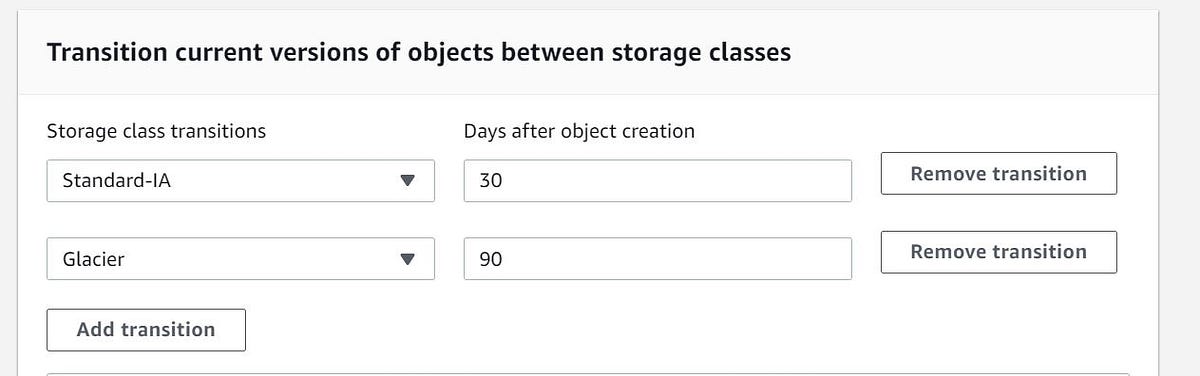

4. We can minimize S3 cost by defining a lifecycle policy that keeps data in the best-fit storage class. In this example I have defined a rule so that my data moves from S3 Standard to S3 Standard-IA after 30 days and then to S3 Glacier after 90 days.
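The same rule can also be written down as a lifecycle configuration document instead of being clicked together in the console. The sketch below only builds and prints that document; the rule ID is a hypothetical name, and the empty prefix filter (apply to every object) is an assumption about the rule's scope.

```python
import json


def build_lifecycle_configuration(rule_id="standard-to-ia-to-glacier"):
    """Build a lifecycle configuration with the two transitions described above.

    rule_id is a hypothetical name chosen for this example.
    """
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Status": "Enabled",
                # Assumption: an empty prefix applies the rule to every object.
                "Filter": {"Prefix": ""},
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


lifecycle_configuration = build_lifecycle_configuration()
print(json.dumps(lifecycle_configuration, indent=2))

# One possible way to apply it (not executed here), using boto3:
#   s3.put_bucket_lifecycle_configuration(
#       Bucket="<bucket-name>", LifecycleConfiguration=lifecycle_configuration)
```

Saved to a file, the printed JSON should also be usable with `aws s3api put-bucket-lifecycle-configuration`.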

AWS S3 file/object size limit – Individual Amazon S3 objects can range in size from a minimum of 0 bytes to a maximum of 5 terabytes. The largest object that can be uploaded in a single PUT is 5 gigabytes. For objects larger than 100 megabytes, customers should consider using the Multipart upload capability.
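To get a feel for how a large object splits into parts, here is a small planning sketch. The part limits it checks (5 MiB to 5 GiB per part, at most 10,000 parts) come from the S3 multipart upload documentation rather than from this post, and the 100 MiB default part size is simply an assumption for illustration.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3
TIB = 1024 ** 4

MIN_PART_SIZE = 5 * MIB    # every part except the last must be at least this big
MAX_PART_SIZE = 5 * GIB
MAX_PARTS = 10_000
MAX_OBJECT_SIZE = 5 * TIB  # the 5 TB object limit, treated as 5 TiB here


def plan_multipart_upload(object_size, part_size=100 * MIB):
    """Return (part_count, last_part_size) for a multipart upload.

    Raises ValueError if the plan would break one of the limits above.
    """
    if not 0 < object_size <= MAX_OBJECT_SIZE:
        raise ValueError("object size must be between 1 byte and 5 TiB")
    if not MIN_PART_SIZE <= part_size <= MAX_PART_SIZE:
        raise ValueError("part size must be between 5 MiB and 5 GiB")
    part_count = -(-object_size // part_size)  # integer ceiling division
    if part_count > MAX_PARTS:
        raise ValueError("too many parts; choose a larger part size")
    last_part_size = object_size - (part_count - 1) * part_size
    return part_count, last_part_size


parts, last = plan_multipart_upload(1 * GIB)
print(f"1 GiB object: {parts} parts, last part {last // MIB} MiB")
```

For very large objects the default part size is too small (the function raises `ValueError`), which is the cue to pick a bigger `part_size`.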

AWS CloudTrail Data events – Amazon S3 is integrated with AWS CloudTrail, a service that provides a record of actions taken by a user, role, or an AWS service in Amazon S3. CloudTrail captures a subset of API calls for Amazon S3 as events, including calls from the Amazon S3 console and code calls to the Amazon S3 APIs.

Using the information collected by CloudTrail, you can determine the request that was made to Amazon S3, the IP address from which the request was made, who made the request, when it was made, and additional details.
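Object-level calls such as GetObject and PutObject are data events, which a trail records only when an event selector asks for them. As a rough sketch, the selector for one bucket could be built like this; the bucket name is the one used later in this post, and logging both reads and writes is an assumption.

```python
import json


def build_s3_data_event_selectors(bucket_name, read_write_type="All"):
    """Build CloudTrail event selectors that log S3 data events for one bucket.

    read_write_type may be "ReadOnly", "WriteOnly", or "All".
    """
    return [
        {
            "ReadWriteType": read_write_type,
            "IncludeManagementEvents": True,
            "DataResources": [
                {
                    "Type": "AWS::S3::Object",
                    # The trailing slash covers every object in the bucket.
                    "Values": [f"arn:aws:s3:::{bucket_name}/"],
                }
            ],
        }
    ]


event_selectors = build_s3_data_event_selectors("testbucket16072021")
print(json.dumps(event_selectors, indent=2))

# One possible way to apply it (not executed here), using boto3:
#   cloudtrail.put_event_selectors(
#       TrailName="<trail-name>", EventSelectors=event_selectors)
```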

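Event notifications in S3 – This feature sends a notification message to a destination whenever specified events occur in your bucket, for example when an object is created or deleted. Before you can enable event notifications for your bucket, you must set up one of the destination types (such as an Amazon SNS topic, an Amazon SQS queue, or an AWS Lambda function) and then configure permissions so that S3 is allowed to deliver to it.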
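As an illustration, a notification configuration that sends object-created events to an SQS queue might look like the sketch below. The queue ARN and the `.jpg` suffix filter are hypothetical values made up for this example, and the queue's own access policy still has to allow S3 to send messages.

```python
import json


def build_notification_configuration(queue_arn, suffix=None):
    """Build a bucket notification configuration for object-created events.

    queue_arn is the ARN of an existing SQS queue; suffix optionally limits
    notifications to keys ending with that string.
    """
    queue_config = {
        "QueueArn": queue_arn,
        "Events": ["s3:ObjectCreated:*"],
    }
    if suffix:
        queue_config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
        }
    return {"QueueConfigurations": [queue_config]}


# Hypothetical queue ARN, for illustration only.
notification_configuration = build_notification_configuration(
    "arn:aws:sqs:us-east-1:123456789012:example-queue", suffix=".jpg"
)
print(json.dumps(notification_configuration, indent=2))

# One possible way to apply it (not executed here), using boto3:
#   s3.put_bucket_notification_configuration(
#       Bucket="<bucket-name>",
#       NotificationConfiguration=notification_configuration)
```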

Bucket versioning – Versioning is a means of keeping multiple variants of an object in the same bucket. You can use versioning to preserve, retrieve, and restore every version of every object stored in your Amazon S3 bucket. With versioning, you can easily recover from both unintended user actions and application failures.
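The recovery behaviour is easier to see in a toy model. The class below is an in-memory sketch, not the S3 API: version IDs are simple counters (real S3 version IDs are opaque strings), a delete only adds a delete marker, and "restore" is modelled as writing an old version back as the newest one.

```python
class VersionedBucket:
    """Toy in-memory model of a versioning-enabled bucket (not the S3 API)."""

    def __init__(self):
        # key -> list of (version_id, body); a body of None is a delete marker
        self._versions = {}
        self._next_id = 1

    def _append(self, key, body):
        version_id = self._next_id
        self._next_id += 1
        self._versions.setdefault(key, []).append((version_id, body))
        return version_id

    def put(self, key, body):
        """Store a new version and return its version ID."""
        return self._append(key, body)

    def delete(self, key):
        """Add a delete marker; older versions stay retrievable."""
        return self._append(key, None)

    def get(self, key, version_id=None):
        """Return the latest version, or a specific one if version_id is given."""
        versions = self._versions.get(key, [])
        if version_id is None:
            candidates = versions[-1:]
        else:
            candidates = [v for v in versions if v[0] == version_id]
        if not candidates or candidates[0][1] is None:
            raise KeyError(key)
        return candidates[0][1]

    def restore(self, key, version_id):
        """Make an older version current again by writing it as a new version."""
        return self.put(key, self.get(key, version_id))


bucket = VersionedBucket()
first = bucket.put("report.txt", b"draft")
second = bucket.put("report.txt", b"final")
bucket.delete("report.txt")          # accidental delete: only adds a marker
bucket.restore("report.txt", second)
print(bucket.get("report.txt"))
```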

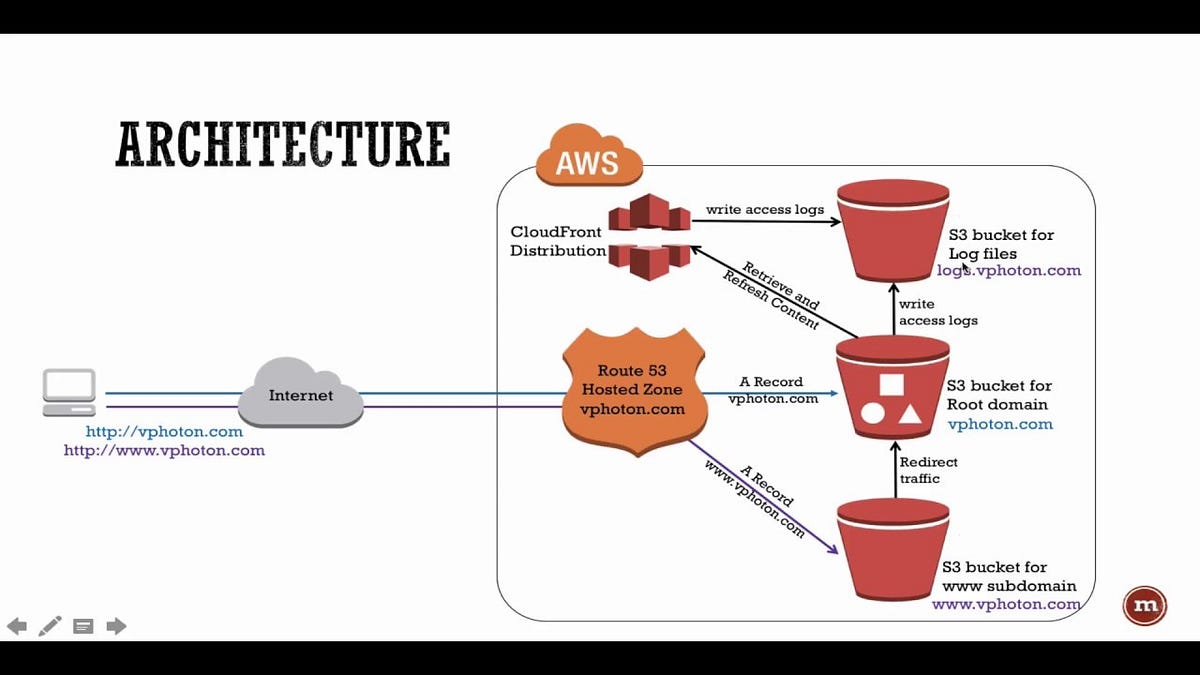

How to Host a Static Website Using AWS S3

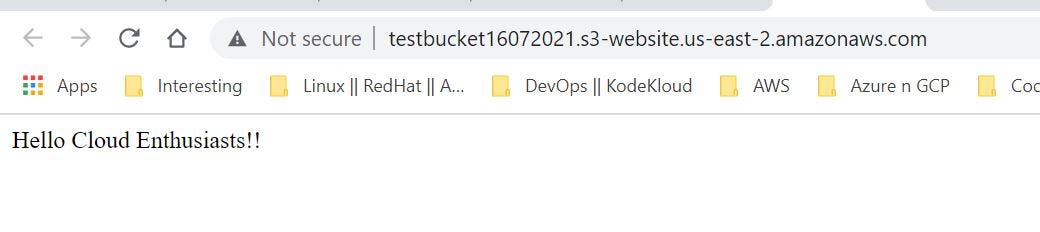

- In the above bucket, go to Properties > Edit > Enable Static website hosting, then enter "index.html" as the Index document and "error.html" as the Error document.

- Upload the sample index and error HTML documents into the same bucket.

- Edit the public access settings to allow public access.

- Test your website by opening the website endpoint in a browser. Below is the example setup we made.
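The hosting settings from the first step correspond to a small website configuration document. The sketch below only builds it, using the same two document names as above.

```python
import json


def build_website_configuration(index_document="index.html",
                                error_document="error.html"):
    """Build a static website configuration for a bucket."""
    return {
        "IndexDocument": {"Suffix": index_document},
        "ErrorDocument": {"Key": error_document},
    }


website_configuration = build_website_configuration()
print(json.dumps(website_configuration, indent=2))

# One possible way to apply it (not executed here), using boto3:
#   s3.put_bucket_website(
#       Bucket="<bucket-name>", WebsiteConfiguration=website_configuration)
```

Keep in mind that the configuration alone does not make the site reachable; the objects still need to be publicly readable.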

AWS S3 Bucket Policies

Bucket policies define the permissions on a bucket: who can access it and which actions they can perform on it.

The policy below grants anonymous users read access to the objects in our bucket above.

--------------------

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "PublicRead",
          "Effect": "Allow",
          "Principal": "*",
          "Action": [
            "s3:GetObject",
            "s3:GetObjectVersion"
          ],
          "Resource": [
            "arn:aws:s3:::testbucket16072021/*"
          ]
        }
      ]
    }

--------------------
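If you need the same policy for another bucket, generating it avoids hand-editing the ARN. This is a minimal sketch that reproduces the statement above for a given bucket name; the function name is made up for this example.

```python
import json


def build_public_read_policy(bucket_name):
    """Build a bucket policy that lets anyone read objects in bucket_name."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicRead",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:GetObject", "s3:GetObjectVersion"],
                # "/*" targets the objects in the bucket, not the bucket itself.
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            }
        ],
    }


policy_json = json.dumps(build_public_read_policy("testbucket16072021"), indent=2)
print(policy_json)

# One possible way to apply it (not executed here), using boto3:
#   s3.put_bucket_policy(Bucket="testbucket16072021", Policy=policy_json)
```

Because the policy is serialized with `json.dumps`, it always uses plain double quotes, which is what the policy editor expects.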

AWS S3 CLI

You can manage S3 more easily and quickly by using the AWS CLI.

A few CLI commands are listed below:

- Create an S3 bucket: `aws s3 mb s3://<bucket-name>`

- List all existing buckets: `aws s3 ls`

- List all objects in a bucket: `aws s3 ls s3://<bucket-name>`
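When these commands are called from a script, it helps to build them as argument lists rather than strings. The sketch below is a hypothetical wrapper around the three commands above; it defaults to a dry run that only prints the command, and the bucket name is a placeholder.

```python
import shlex
import shutil
import subprocess


def make_bucket(bucket_name):
    """Arguments for: aws s3 mb s3://<bucket-name>"""
    return ["aws", "s3", "mb", f"s3://{bucket_name}"]


def list_buckets():
    """Arguments for: aws s3 ls"""
    return ["aws", "s3", "ls"]


def list_objects(bucket_name):
    """Arguments for: aws s3 ls s3://<bucket-name>"""
    return ["aws", "s3", "ls", f"s3://{bucket_name}"]


def run(command, dry_run=True):
    """Print the command; execute it only when dry_run is False and the CLI exists."""
    print(shlex.join(command))
    if dry_run or shutil.which("aws") is None:
        return None
    result = subprocess.run(command, check=True, capture_output=True, text=True)
    return result.stdout


# Placeholder bucket name; nothing is sent to AWS in dry-run mode.
for command in (make_bucket("my-example-bucket"),
                list_buckets(),
                list_objects("my-example-bucket")):
    run(command)
```

Passing `dry_run=False` runs the command with whatever credentials the AWS CLI is configured to use.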

AWS S3 Pricing

Pay only for what you use. There is no minimum fee. There are six Amazon S3 cost components to consider when storing and managing your data – storage pricing, request and data retrieval pricing, data transfer and transfer acceleration pricing, data management and analytics pricing, replication pricing, and the price to process your data with S3 Object Lambda.